Introduction

Just because the election for 2017 crop of Hall of Famers only finished 5 months ago doesn’t mean it’s too early to start wondering which current major leaguers will be enshrined in Cooperstown someday. In fact it’s only 5 months until the 2018 ballot is released so I think now is quite a felicitous time to examine previous ballots. Two questions I want to answer at the end of this post are:

- Who got elected into the HoF and how similar/different were they from those who didn’t?

- Can we predict whose who will make it to the HoF?

Although there are four main position players can assume in a game of baseball: Infielders, Outfielders, Pitchers, and Catchers, the focus of this post will be on Infielders and Outfielders. This is one of the projects I did for Udacity’s Data Analyst Nanodegree.

Background information

The National Baseball Hall of Fame and Museum is located in Cooperstown, New York and was dedicated in 1939. A baseball player can be elected to the Hall of Fame if they meet the following criteria:

- The player must have competed in at least ten seasons;

- The player has been retired for at least five seasons;

- A screening committee must approve the player’s worthiness to be included on the ballot and most players who played regularly for ten or more years are deemed worthy;

- The player must not be on the ineligible list (that means that the player should not be banned from baseball);

- A player is considered elected if he receives at least 75% of the vote in the election; and

- A player stays on the ballot the following year if they receive at least 5% of the vote and can appear on ballots for a maximum of 10 years.

These criteria tell us what information we need to gather before answering our questions, namely how long each player competed, when they retired, whether they have been banned from the game, etc. In the next part, we are going to find out where to obtain these information.

Dataset

The 2017 version of Lahman’s Baseball Database contains complete batting and pitching statistics from 1871 to 2017, plus fielding statistics, standings, team stats, managerial records, post-season data, and more. The full database, as comma-separated files, can be downloaded from here. However, for our predictions, we only need the following .csv files:

- Master.csv;

- Batting.csv;

- Fielding.csv;

- Teams.csv;

- AwardsPlayers.csv;

- AllstarFull.csv;

- Appearances.csv; and last but not least

- HallOfFame.csv.

Unfortunately, it’s not possible to tell if a player has been banned from baseball from this database, but we can always look it up on the net. Next we will read in the data and clean them.

Data Cleaning and Pre-processing

# Import data to DataFrames

%matplotlib inline

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

# Read in the CSV files

master_df = pd.read_csv('Master.csv',usecols=['playerID','nameFirst','nameLast','bats','throws','finalGame'])

fielding_df = pd.read_csv('Fielding.csv',usecols=['playerID','A','E','DP'])

batting_df = pd.read_csv('Batting.csv', usecols = ['playerID', 'AB', 'R', 'H', '2B', '3B', 'HR', 'RBI',\

'SB', 'CS', 'BB','SO', 'IBB', 'HBP', 'SH', 'SF'])

teams_df = pd.read_csv('Teams.csv')

awards_df = pd.read_csv('AwardsPlayers.csv', usecols=['playerID','awardID','yearID'])

allstar_df = pd.read_csv('AllstarFull.csv', usecols=['playerID','yearID'])

hof_df = pd.read_csv('HallOfFame.csv',usecols=['playerID','yearid','votedBy','needed_note','inducted','category'])

appearances_df = pd.read_csv('Appearances.csv')In general, we are only interested in players elected by the BBWAA, but we should also include two players (Roberto Clemente and Lou Gehrig) who were elected via “Special Election”, because they clearly had Hall of Fame stats, but simply bypassed the process due to untimely circumstances.

Moreover, there were three occasions - in 1949, 1964, and 1967 - when the BBWAA conducted a special run-off election whereby the one player who received the most run-off votes would be elected to the HoF, so we should include players who got elected with a run-off ballot as well.

hof = hof_df[((hof_df['votedBy'] == 'BBWAA') | (hof_df['votedBy'] == 'Special Election')) | (hof_df['votedBy'] == 'Run Off')]

hof = hof[(hof['category'] == 'Player') & (hof['inducted'] == 'Y')]

# Drop these columns as no longer useful

hof = hof.drop(['category', 'inducted', 'needed_note', 'yearid'], axis = 1)

# Convert `votedBy` column to numeric

hof['votedBy'] = 1

# Give `votedBy` column a better name

hof.rename(columns = {'votedBy':'HoF'}, inplace = True)Next we’ll gather information about each player’s performance, starting with batting statistics:

# Group by playerID

batting = batting_df.groupby('playerID', as_index = False).sum()

batting = batting.fillna(0)

batting.head()| playerID | AB | R | H | 2B | 3B | HR | RBI | SB | CS | BB | SO | IBB | HBP | SH | SF | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | aardsda01 | 4 | 0 | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0 | 2.0 | 0.0 | 0.0 | 1.0 | 0.0 |

| 1 | aaronha01 | 12364 | 2174 | 3771 | 624 | 98 | 755 | 2297.0 | 240.0 | 73.0 | 1402 | 1383.0 | 293.0 | 32.0 | 21.0 | 121.0 |

| 2 | aaronto01 | 944 | 102 | 216 | 42 | 6 | 13 | 94.0 | 9.0 | 8.0 | 86 | 145.0 | 3.0 | 0.0 | 9.0 | 6.0 |

| 3 | aasedo01 | 5 | 0 | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | 0 | 3.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 4 | abadan01 | 21 | 1 | 2 | 0 | 0 | 0 | 0.0 | 0.0 | 1.0 | 4 | 5.0 | 0.0 | 0.0 | 0.0 | 0.0 |

The columns have somewhat cryptic names, but you can always take a look at the information at the README page to see what they mean.

Next up is fielding statistics:

# Group by playerID

fielding = fielding_df.groupby('playerID', as_index = False).sum()

fielding = fielding.fillna(0)

fielding.head()| playerID | A | E | DP | |

|---|---|---|---|---|

| 0 | aardsda01 | 29.0 | 3.0 | 2.0 |

| 1 | aaronha01 | 429.0 | 144.0 | 218.0 |

| 2 | aaronto01 | 113.0 | 22.0 | 124.0 |

| 3 | aasedo01 | 135.0 | 13.0 | 10.0 |

| 4 | abadan01 | 1.0 | 1.0 | 3.0 |

Next, we will look at the allstar_df DataFrame. It contains information on which players made appearances in Allstar games. The Allstar game is an exhibition game played each year at mid-season. Major League Baseball consists of two leagues: the American league and the National league. The top 25 players from each league are selected to represent their league in the Allstar game. Hence to make appearances in the Allstar game is quite an achievement and we want to know how many Allstar games each player has participated in.

allstar = allstar_df.groupby('playerID').count().reset_index()It might be a good idea to rename the column ‘yearID’ to something else to avoid confusion.

allstar.rename(columns = {'yearID':'years_allstar'}, inplace = True)Next up is awards_df, let’s see how many different awards there are

awards_df['awardID'].unique()array(['Pitching Triple Crown', 'Triple Crown',

'Baseball Magazine All-Star', 'Most Valuable Player',

'TSN All-Star', 'TSN Guide MVP',

'TSN Major League Player of the Year', 'TSN Pitcher of the Year',

'TSN Player of the Year', 'Rookie of the Year', 'Babe Ruth Award',

'Lou Gehrig Memorial Award', 'World Series MVP', 'Cy Young Award',

'Gold Glove', 'TSN Fireman of the Year', 'All-Star Game MVP',

'Hutch Award', 'Roberto Clemente Award', 'Rolaids Relief Man Award',

'NLCS MVP', 'ALCS MVP', 'Silver Slugger', 'Branch Rickey Award',

'Hank Aaron Award', 'TSN Reliever of the Year',

'Comeback Player of the Year', 'Outstanding DH Award',

'Reliever of the Year Award'], dtype=object)

That’s a lot of awards, but not all of them are correlated with being voted into HoF. In fact, let’s just focus on the more important ones, namely: Most Valuable Player, Rookie of the Year, Gold Glove, Silver Slugger, and World Series MVP awards. (Cy Young, though being a major award, is only for pitchers and thus excluded.) Now we need to count how many different awards each player managed to win.

# Keeping only important awards

awards_list = ['Most Valuable Player','Rookie of the Year','Gold Glove','Silver Slugger','World Series MVP']

awards = awards_df[awards_df['awardID'].isin(awards_list)]

# Pivot the data frame to count the number of different awards

awards = awards.pivot_table(index = 'playerID', columns = 'awardID', aggfunc='count')

# Flatten the pivot table

awards = pd.DataFrame(awards.to_records())Notice that we have inadvertently introduced a decent number of NA values that are actually zeros when making the pivot table, so we’ll have to replace them accordingly. We have also changed the column names as a result of flattening our pivot table. The simplest way to fix this is by string match-and-replace.

# Fix column names after flattening

awards.columns = [col.replace("('yearID', '", "").replace("')", "") \

for col in awards.columns]

awards = awards.fillna(0)At this point we have gathered quite a decent amount of information on players’ statistics, it’s a good idea to try and compile them together:

player_stats = batting.merge(fielding, on = 'playerID', how ='left')

player_stats = player_stats.merge(allstar, on = 'playerID', how ='left')

player_stats = player_stats.merge(awards, on = 'playerID', how ='left')

player_stats = player_stats.merge(hof, on = 'playerID', how ='left')

player_stats = player_stats.fillna(0)

player_stats.head()| playerID | AB | R | H | 2B | 3B | HR | RBI | SB | CS | ... | A | E | DP | years_allstar | Gold Glove | Most Valuable Player | Rookie of the Year | Silver Slugger | World Series MVP | HoF | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | aardsda01 | 4 | 0 | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | ... | 29.0 | 3.0 | 2.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 1 | aaronha01 | 12364 | 2174 | 3771 | 624 | 98 | 755 | 2297.0 | 240.0 | 73.0 | ... | 429.0 | 144.0 | 218.0 | 25.0 | 3.0 | 1.0 | 0.0 | 0.0 | 0.0 | 1.0 |

| 2 | aaronto01 | 944 | 102 | 216 | 42 | 6 | 13 | 94.0 | 9.0 | 8.0 | ... | 113.0 | 22.0 | 124.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 3 | aasedo01 | 5 | 0 | 0 | 0 | 0 | 0 | 0.0 | 0.0 | 0.0 | ... | 135.0 | 13.0 | 10.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

| 4 | abadan01 | 21 | 1 | 2 | 0 | 0 | 0 | 0.0 | 0.0 | 1.0 | ... | 1.0 | 1.0 | 3.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

5 rows × 26 columns

We also need to know when a player played their last game, these can be found in the master_df DataFrame:

master_df.head()| playerID | nameFirst | nameLast | bats | throws | finalGame | |

|---|---|---|---|---|---|---|

| 0 | aardsda01 | David | Aardsma | R | R | 2015-08-23 |

| 1 | aaronha01 | Hank | Aaron | R | R | 1976-10-03 |

| 2 | aaronto01 | Tommie | Aaron | R | R | 1971-09-26 |

| 3 | aasedo01 | Don | Aase | R | R | 1990-10-03 |

| 4 | abadan01 | Andy | Abad | L | L | 2006-04-13 |

Data in bats and throws columns are binary values with R (L) indicating a player’s batting/throwing hand is his right (left), so it’s much simpler to represent the information with 0-1 integers.

# Create a function to convert the `bats` and `throws` colums to numeric

def bats_throws(col):

if col == "R":

return 1

else:

return 0

#player_stats['bats_R'] = player_stats['bats'].apply(bats_throws)

#player_stats['throws_R'] = player_stats['throws'].apply(bats_throws)

master_df['bats_R'] = master_df['bats'].apply(bats_throws)

master_df['throws_R'] = master_df['throws'].apply(bats_throws)

# Drop the old columns

master_df = master_df.drop(['bats','throws'], axis = 1)Moreover, the debut and finalGame columns are currently strings so we’ll need to convert them to datetime object and extract the year, since we don’t need details as granular as date and month.

from datetime import datetime

def getYear(datestring):

return datetime.strptime(datestring, '%Y-%m-%d').year

# Drop rows that have NA values

master = master_df.dropna(subset = ['finalGame'])

# Get years from strings

master = master.join(master['finalGame'].map(getYear), lsuffix='_')

master = master.drop('finalGame_', axis = 1)master.head()| playerID | nameFirst | nameLast | bats_R | throws_R | finalGame | |

|---|---|---|---|---|---|---|

| 0 | aardsda01 | David | Aardsma | 1 | 1 | 2015 |

| 1 | aaronha01 | Hank | Aaron | 1 | 1 | 1976 |

| 2 | aaronto01 | Tommie | Aaron | 1 | 1 | 1971 |

| 3 | aasedo01 | Don | Aase | 1 | 1 | 1990 |

| 4 | abadan01 | Andy | Abad | 0 | 0 | 2006 |

Next up is the appearances_df DataFrame. This contains information on how many appearances each player had at each position for each year and will tell us how long a player has competed in the game. Let’s take a look at the first few rows of the DataFrame to see what we have.

appearances_df.head()| yearID | teamID | lgID | playerID | G_all | GS | G_batting | G_defense | G_p | G_c | ... | G_2b | G_3b | G_ss | G_lf | G_cf | G_rf | G_of | G_dh | G_ph | G_pr | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1871 | TRO | NaN | abercda01 | 1 | NaN | 1 | 1 | 0 | 0 | ... | 0 | 0 | 1 | 0 | 0 | 0 | 0 | NaN | NaN | NaN |

| 1 | 1871 | RC1 | NaN | addybo01 | 25 | NaN | 25 | 25 | 0 | 0 | ... | 22 | 0 | 3 | 0 | 0 | 0 | 0 | NaN | NaN | NaN |

| 2 | 1871 | CL1 | NaN | allisar01 | 29 | NaN | 29 | 29 | 0 | 0 | ... | 2 | 0 | 0 | 0 | 29 | 0 | 29 | NaN | NaN | NaN |

| 3 | 1871 | WS3 | NaN | allisdo01 | 27 | NaN | 27 | 27 | 0 | 27 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | NaN | NaN | NaN |

| 4 | 1871 | RC1 | NaN | ansonca01 | 25 | NaN | 25 | 25 | 0 | 5 | ... | 2 | 20 | 0 | 1 | 0 | 0 | 1 | NaN | NaN | NaN |

5 rows × 21 columns

# Drop unnecessary columns

appearances_df = appearances_df.drop(['G_ph', 'G_pr'], axis = 1)

appearances = appearances_df.groupby(['playerID'], as_index = False).sum()

appearances = appearances.fillna(0)appearances_df.columnsIndex(['yearID', 'teamID', 'lgID', 'playerID', 'G_all', 'GS', 'G_batting',

'G_defense', 'G_p', 'G_c', 'G_1b', 'G_2b', 'G_3b', 'G_ss', 'G_lf',

'G_cf', 'G_rf', 'G_of', 'G_dh'],

dtype='object')

As mentioned earlier, this post is focused on infielders and outfielders only, so we need to pick players who only play at these positions. However, some players played at multiple different positions in the earlier years of MLB, so how are we going to filter out pitchers and catchers? There are no hard and fast rules, but we can convert these numbers into percentages and exclude people who played more than, say, 10% of their games at either of these positions.

positions = appearances_df.columns[5:]

# Loop through the list and divide each column by the players total games played

for col in positions:

column = col + '_percent'

appearances[column] = appearances[col] / appearances['G_all'] # Eliminate players who played 10% or more of their games as Pitchers or Catchers

appearances = appearances[(appearances['G_p_percent'] < 0.1) & (appearances['G_c_percent'] < 0.1)]

# Drop columns that are no longer useful

appearances = appearances.drop(['G_p_percent','G_c_percent', 'yearID'], axis = 1)

appearances = appearances.drop(positions.tolist(), axis = 1)appearances.head()| playerID | G_all | GS_percent | G_batting_percent | G_defense_percent | G_1b_percent | G_2b_percent | G_3b_percent | G_ss_percent | G_lf_percent | G_cf_percent | G_rf_percent | G_of_percent | G_dh_percent | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | aaronha01 | 3298 | 0.962098 | 1.0 | 0.905094 | 0.063675 | 0.013038 | 0.002122 | 0.000000 | 0.095512 | 0.093390 | 0.659187 | 0.836871 | 0.060946 |

| 2 | aaronto01 | 437 | 0.471396 | 1.0 | 0.791762 | 0.530892 | 0.016018 | 0.022883 | 0.000000 | 0.308924 | 0.002288 | 0.004577 | 0.313501 | 0.000000 |

| 4 | abadan01 | 15 | 0.266667 | 1.0 | 0.600000 | 0.533333 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.066667 | 0.066667 | 0.000000 |

| 6 | abadijo01 | 12 | 0.000000 | 1.0 | 1.000000 | 1.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| 7 | abbated01 | 855 | 0.000000 | 1.0 | 1.000000 | 0.000000 | 0.490058 | 0.023392 | 0.453801 | 0.000000 | 0.002339 | 0.001170 | 0.003509 | 0.000000 |

The data look fine but… how are we going to deal with the years? It is unlikely that the number 1950 will have the same relationship to the rest of the data that the model will infer, so is it OK to drop them like we just did?

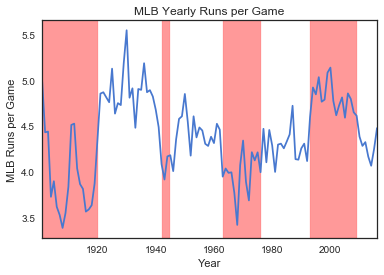

No it’s not. It turns out as MLB progressed, different eras emerged where the amount of runs per game increased or decreased significantly. This means that when a player played has a large influence on that player’s career statistics. The HoF voters take this into account when voting players in, so our model needs that information too. To get information such as runs allowed and games played over the years, we need to turn to the teams_df DataFrame.

We only need to consider columns needed to calculate runs per game per year, the rest we can safely ignore. Also looking back at the history of MLB, the rules of baseball had not settled into place before 1900 and the game was a totally different beast back then so it makes sense to remove these rows from the data.

# Runs and games per year

runs_games = teams_df.groupby('yearID').sum()[['G','R']]

runs_games['RPG'] = runs_games['R'] / runs_games['G']

runs_games = runs_games.loc[1901:]

runs_games.head()| G | R | RPG | |

|---|---|---|---|

| yearID | |||

| 1901 | 2220 | 11068 | 4.985586 |

| 1902 | 2230 | 9883 | 4.431839 |

| 1903 | 2228 | 9892 | 4.439856 |

| 1904 | 2498 | 9307 | 3.725781 |

| 1905 | 2474 | 9640 | 3.896524 |

# Plot number of runs per game over time

runs_games['RPG'].plot()

plt.title('MLB Yearly Runs per Game')

plt.xlabel('Year')

plt.ylabel('MLB Runs per Game')

plt.axvspan(1901, 1920, color='red', alpha=0.4)

plt.axvspan(1942, 1945, color='red', alpha=0.4)

plt.axvspan(1963, 1976, color='red', alpha=0.4)

plt.axvspan(1993, 2009, color='red', alpha=0.4)<matplotlib.patches.Polygon at 0x178c12390>

There were indeed some periods when the number of runs per game was much higher than others. For example, the years from 1920 - 1941 saw an unprecedented high number of runs scored per game and was often referred to as the Lively Ball Era. Another sharp rise in runs per game occurred during early ’90s to 2008, the Steroid Era. To capture this information, we need to convert years to eras in our player_stats DataFrame and turn them into new features (columns). We can re-use part of the codes we wrote for awards_df to accomplish this.

yr_appearances = appearances_df.copy()[['yearID','playerID','teamID']]

# Remove players in or before 1900

yr_appearances = yr_appearances[yr_appearances['yearID'] > 1900]

def toEra(year):

if int(year) < 1921:

return '1901-1920'

elif int(year) < 1942:

return '1921-1941'

elif int(year) < 1946:

return '1942-1945'

elif int(year) < 1963:

return '1946-1962'

elif int(year) < 1977:

return '1963-1976'

elif int(year) < 1993:

return '1977-1992'

elif int(year) < 2010:

return '1993-2009'

else:

return 'post2009'

yr_appearances['yearID'] = yr_appearances['yearID'].map(toEra)

# Pivot the data frame to count the number of different awards

yr_appearances = yr_appearances.pivot_table(index='playerID', columns = 'yearID', aggfunc='count')

# Flatten the pivot table

yr_appearances = pd.DataFrame(yr_appearances.to_records())

# Fix column names after flattening

yr_appearances.columns = [col.replace("('teamID', '", "").replace("')", "") \

for col in yr_appearances.columns]

yr_appearances = yr_appearances.fillna(0)

# Number of years playing

yr_appearances['years_playing'] = yr_appearances.sum(axis = 1)

yr_appearances = yr_appearances.merge(appearances, on = 'playerID', how = 'inner')

yr_appearances.head()| playerID | 1901-1920 | 1921-1941 | 1942-1945 | 1946-1962 | 1963-1976 | 1977-1992 | 1993-2009 | post2009 | years_playing | ... | G_defense_percent | G_1b_percent | G_2b_percent | G_3b_percent | G_ss_percent | G_lf_percent | G_cf_percent | G_rf_percent | G_of_percent | G_dh_percent | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | aaronha01 | 0.0 | 0.0 | 0.0 | 9.0 | 14.0 | 0.0 | 0.0 | 0.0 | 23.0 | ... | 0.905094 | 0.063675 | 0.013038 | 0.002122 | 0.000000 | 0.095512 | 0.093390 | 0.659187 | 0.836871 | 0.060946 |

| 1 | aaronto01 | 0.0 | 0.0 | 0.0 | 1.0 | 6.0 | 0.0 | 0.0 | 0.0 | 7.0 | ... | 0.791762 | 0.530892 | 0.016018 | 0.022883 | 0.000000 | 0.308924 | 0.002288 | 0.004577 | 0.313501 | 0.000000 |

| 2 | abadan01 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 3.0 | 0.0 | 3.0 | ... | 0.600000 | 0.533333 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.066667 | 0.066667 | 0.000000 |

| 3 | abbated01 | 8.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 8.0 | ... | 1.000000 | 0.000000 | 0.490058 | 0.023392 | 0.453801 | 0.000000 | 0.002339 | 0.001170 | 0.003509 | 0.000000 |

| 4 | abbotje01 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 5.0 | 0.0 | 5.0 | ... | 0.793991 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.270386 | 0.347639 | 0.236052 | 0.793991 | 0.051502 |

5 rows × 23 columns

Now that we have gathered pretty much all necessary information, it’s time for a final merge. It’s likely that new NA values will be created as a result of merging, so we need to check if there are any of them as well.

df = master.merge(player_stats, on = 'playerID', how ='left')

df = df.merge(yr_appearances, on = 'playerID', how ='inner')

df.info()<class 'pandas.core.frame.DataFrame'>

Int64Index: 7237 entries, 0 to 7236

Data columns (total 53 columns):

playerID 7237 non-null object

nameFirst 7235 non-null object

nameLast 7237 non-null object

bats_R 7237 non-null int64

throws_R 7237 non-null int64

finalGame 7237 non-null int64

AB 7237 non-null float64

R 7237 non-null float64

H 7237 non-null float64

2B 7237 non-null float64

3B 7237 non-null float64

HR 7237 non-null float64

RBI 7237 non-null float64

SB 7237 non-null float64

CS 7237 non-null float64

BB 7237 non-null float64

SO 7237 non-null float64

IBB 7237 non-null float64

HBP 7237 non-null float64

SH 7237 non-null float64

SF 7237 non-null float64

A 7237 non-null float64

E 7237 non-null float64

DP 7237 non-null float64

years_allstar 7237 non-null float64

Gold Glove 7237 non-null float64

Most Valuable Player 7237 non-null float64

Rookie of the Year 7237 non-null float64

Silver Slugger 7237 non-null float64

World Series MVP 7237 non-null float64

HoF 7237 non-null float64

1901-1920 7237 non-null float64

1921-1941 7237 non-null float64

1942-1945 7237 non-null float64

1946-1962 7237 non-null float64

1963-1976 7237 non-null float64

1977-1992 7237 non-null float64

1993-2009 7237 non-null float64

post2009 7237 non-null float64

years_playing 7237 non-null float64

G_all 7237 non-null int64

GS_percent 7237 non-null float64

G_batting_percent 7237 non-null float64

G_defense_percent 7237 non-null float64

G_1b_percent 7237 non-null float64

G_2b_percent 7237 non-null float64

G_3b_percent 7237 non-null float64

G_ss_percent 7237 non-null float64

G_lf_percent 7237 non-null float64

G_cf_percent 7237 non-null float64

G_rf_percent 7237 non-null float64

G_of_percent 7237 non-null float64

G_dh_percent 7237 non-null float64

dtypes: float64(46), int64(4), object(3)

memory usage: 3.0+ MB

The only column that has NA values is nameFirst, and since there are only two of them, let’s not worry about these. We have finally consolidated everything into a single DataFrame with everything we need to know about the players. In the next step, we are going to draw some insights from the data by adding new features.

df.head()| playerID | nameFirst | nameLast | bats_R | throws_R | finalGame | AB | R | H | 2B | ... | G_defense_percent | G_1b_percent | G_2b_percent | G_3b_percent | G_ss_percent | G_lf_percent | G_cf_percent | G_rf_percent | G_of_percent | G_dh_percent | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | aaronha01 | Hank | Aaron | 1 | 1 | 1976 | 12364.0 | 2174.0 | 3771.0 | 624.0 | ... | 0.905094 | 0.063675 | 0.013038 | 0.002122 | 0.000000 | 0.095512 | 0.093390 | 0.659187 | 0.836871 | 0.060946 |

| 1 | aaronto01 | Tommie | Aaron | 1 | 1 | 1971 | 944.0 | 102.0 | 216.0 | 42.0 | ... | 0.791762 | 0.530892 | 0.016018 | 0.022883 | 0.000000 | 0.308924 | 0.002288 | 0.004577 | 0.313501 | 0.000000 |

| 2 | abadan01 | Andy | Abad | 0 | 0 | 2006 | 21.0 | 1.0 | 2.0 | 0.0 | ... | 0.600000 | 0.533333 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.066667 | 0.066667 | 0.000000 |

| 3 | abbated01 | Ed | Abbaticchio | 1 | 1 | 1910 | 3044.0 | 355.0 | 772.0 | 99.0 | ... | 1.000000 | 0.000000 | 0.490058 | 0.023392 | 0.453801 | 0.000000 | 0.002339 | 0.001170 | 0.003509 | 0.000000 |

| 4 | abbotje01 | Jeff | Abbott | 1 | 0 | 2001 | 596.0 | 82.0 | 157.0 | 33.0 | ... | 0.793991 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.270386 | 0.347639 | 0.236052 | 0.793991 | 0.051502 |

5 rows × 53 columns

Feature Engineering

We’ll start by adding important baseball statistics such as batting average, on-base percentage, slugging percentage, and on-base plus slugging percentage, using the following formulas:

- Batting Ave. = Hits / At Bats

- Plate Appearances = At Bats + Walks + Sacrifice Flys & Hits + Hit by Pitch

- On-base = (Hits + Walks + Hit by Pitch) / Plate Appearances

- Slugging = ((Home Runs x 4) + (Triples x 3) + (Doubles x 2) + Singles) / At Bats

- On-Base plus Slugging = On-base + Slugging

Since we are computing a lot of ratios, NA values may come about which we’ll have to remove

# Create Batting Average (`AVE`) column

df['AVE'] = df['H'] / df['AB']

# Create On Base Percent (`OBP`) column

plate_appearances = (df['AB'] + df['BB'] + df['SF'] + df['SH'] + df['HBP'])

df['OBP'] = (df['H'] + df['BB'] + df['HBP']) / plate_appearances

# Create Slugging Percent (`Slug_Percent`) column

single = ((df['H'] - df['2B']) - df['3B']) - df['HR']

df['Slug_Percent'] = ((df['HR'] * 4) + (df['3B'] * 3) + (df['2B'] * 2) + single) / df['AB']

# Create On Base plus Slugging Percent (`OPS`) column

hr = df['HR'] * 4

triple = df['3B'] * 3

double = df['2B'] * 2

df['OPS'] = df['OBP'] + df['Slug_Percent']df = df.dropna()

print(df.isnull().sum(axis=0).tolist())[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

Yass! Now we are free of the NA plague. Before we move on to create some plots, let’s try to identify the outliers in our data. They are players who boasted HoF-worthy stats but were ignored by HoF voters due to match-fixing scandals and performance enhancing drugs (PED) allegations. To find out who they were, we need to read up on the history of MLB. The following articles contain may help us in that regard:

- List of people banned from Major League Baseball

- These 11 Players’ Hall of Fame Inductions Have Been Sabotaged by Steroid Allegations and Admissions

- Top 15 Baseball Players Who Have Used Performance Enhancing Drugs

Once we have done our homework, it’s time to remove these names from our data.

players = ['jacksjo01', 'rosepe01', 'giambja01', 'sheffga01', 'braunry02', 'bondsba01', \

'palmera01', 'mcgwima01', 'clemero02', 'sosasa01', 'rodrial01']

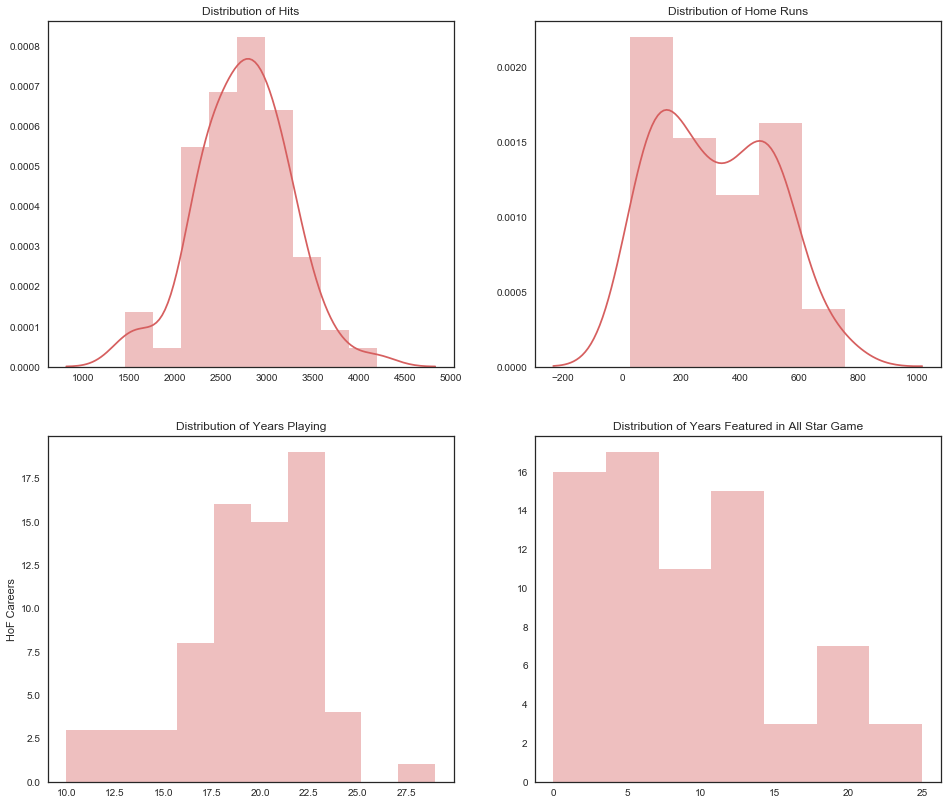

df = df[~df['playerID'].isin(players)]Next, we will plot out the distributions for certain statistics such as Hits, Home Runs, Years Playing, and Years Featured in All Star Game for Hall of Fame players to see if there are any trends among them.

# Filter players who are in HoF

df_hof = df[df['HoF'] == 1]

print(len(df_hof))

sns.set(style="white", palette="muted", color_codes=True)

# Initialize the figure and add subplots

fig = plt.figure(figsize=(16, 14))

ax1 = fig.add_subplot(2,2,1)

ax2 = fig.add_subplot(2,2,2)

ax3 = fig.add_subplot(2,2,3)

ax4 = fig.add_subplot(2,2,4)

# Create distribution plots for Hits, Home Runs, Years Played and All Star Games

sns.distplot(df_hof['H'], ax = ax1, kde = True, axlabel = False, color = 'r')

ax1.set_title('Distribution of Hits')

sns.distplot(df_hof['HR'], ax = ax2, kde = True, axlabel = False, color = 'r')

ax2.set_title('Distribution of Home Runs')

sns.distplot(df_hof['years_playing'], ax = ax3, kde = False, axlabel = False, color = 'r')

ax3.set_title('Distribution of Years Playing')

ax3.set_ylabel('HoF Careers')

sns.distplot(df_hof['years_allstar'], ax = ax4, kde = False, axlabel = False, color = 'r')

ax4.set_title('Distribution of Years Featured in All Star Game')72

<matplotlib.text.Text at 0x17dcdcbe0>

We have 70 Hall of Famers in our data and they all boast admirable statistics. A few points to note:

- High number of Hits seem to be favorable: Most HoF players scored on average 3000 hits.

- Home Run is not so important: The majority of inductees didn’t hit more than 200 home runs in their career.

- With experience comes votes: Players who have competed in more than 20 seasons make up a large portion of Hall of Famers.

- All Star Game appearances don’t have much weight: In fact most players inducted only have participated in less than 10 games.

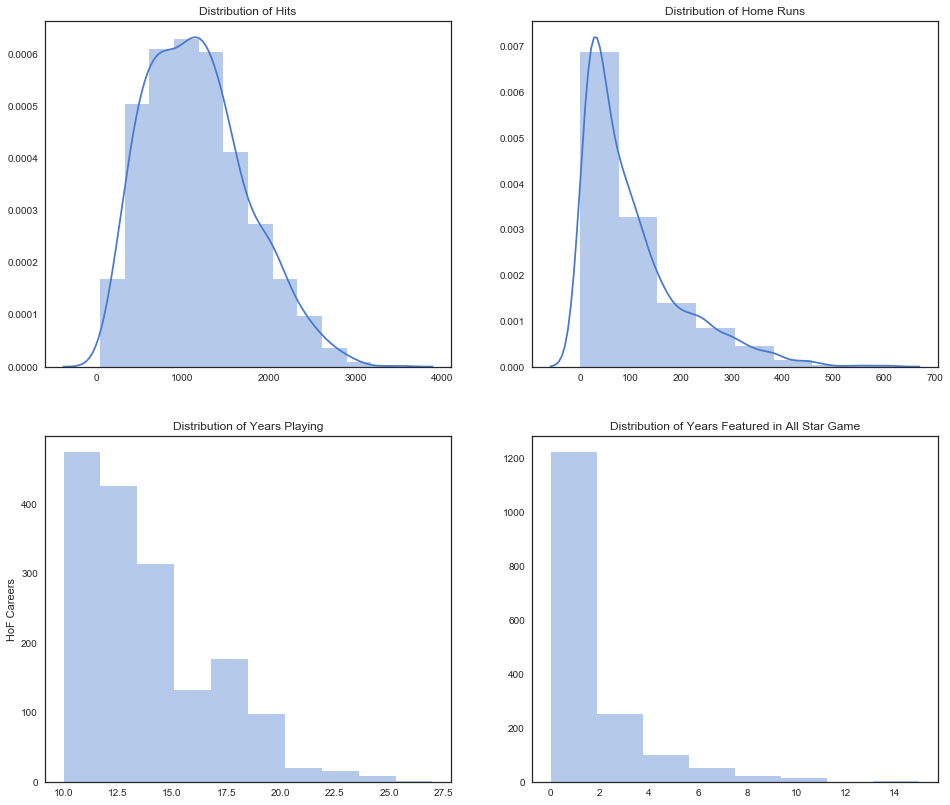

Now let’s see how they fare against non-HoF players. To ensure we are comparing apples to apples, let’s exclude non-HoF players with less than 10 years of experience.

# Filter `df` for players with 10 or more years of experience

df_10 = df[(df['years_playing'] >= 10) & (df['HoF'] == 0)]

print(len(df_10))

# Initialize the figure and add subplots

fig = plt.figure(figsize=(16, 14))

ax1 = fig.add_subplot(2,2,1)

ax2 = fig.add_subplot(2,2,2)

ax3 = fig.add_subplot(2,2,3)

ax4 = fig.add_subplot(2,2,4)

# Create distribution plots for Hits, Home Runs, Years Played and All Star Games

sns.distplot(df_10['H'], ax = ax1, kde = True, axlabel = False, bins = 12)

ax1.set_title('Distribution of Hits')

sns.distplot(df_10['HR'], ax = ax2, kde = True, axlabel = False, bins = 8)

ax2.set_title('Distribution of Home Runs')

sns.distplot(df_10['years_playing'], ax = ax3, kde = False, axlabel = False, bins = 10)

ax3.set_title('Distribution of Years Playing')

ax3.set_ylabel('HoF Careers')

sns.distplot(df_10['years_allstar'], ax = ax4, kde = False, axlabel = False, bins = 8)

ax4.set_title('Distribution of Years Featured in All Star Game')1665

<matplotlib.text.Text at 0x17f197710>

There are 1675 non-HoF players in our data and it’s fair to say that most of them are less experienced players, which partly explains their lackluster statistics compared to the veterans who have made it to Cooperstown.

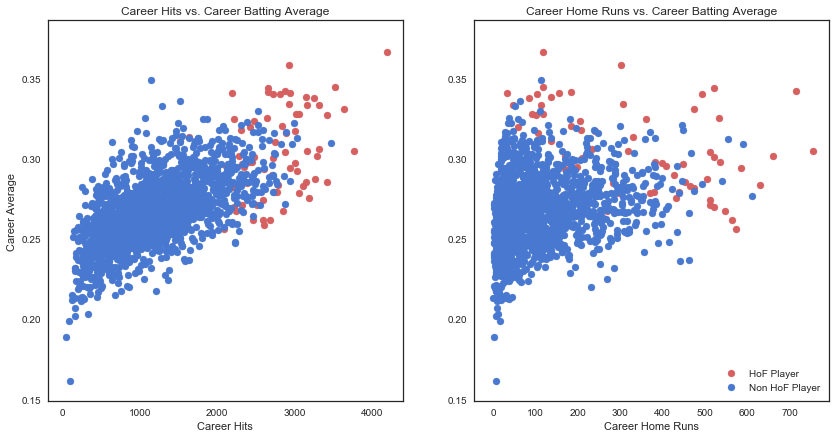

Next we want to see how Hits vs. Batting Average and Home Runs vs. Batting Average differ between HoF and non-HoF players.

# Initialize the figure and add subplots

fig = plt.figure(figsize=(14, 7))

ax1 = fig.add_subplot(1,2,1)

ax2 = fig.add_subplot(1,2,2)

# Create Scatter plots for Hits vs. Average and Home Runs vs. Average

ax1.scatter(df_hof['H'], df_hof['AVE'], c='r', label='HoF Player')

ax1.scatter(df_10['H'], df_10['AVE'], c='b', label='Non HoF Player')

ax1.set_title('Career Hits vs. Career Batting Average')

ax1.set_xlabel('Career Hits')

ax1.set_ylabel('Career Average')

ax2.scatter(df_hof['HR'], df_hof['AVE'], c='r', label='HoF Player')

ax2.scatter(df_10['HR'], df_10['AVE'], c='b', label='Non HoF Player')

ax2.set_title('Career Home Runs vs. Career Batting Average')

ax2.set_xlabel('Career Home Runs')

ax2.legend(loc='lower right', scatterpoints=1)<matplotlib.legend.Legend at 0x1834a5f28>

Suffice to say, it’s not surprising to see HoF players as high-achievers compared to their non-HoF teammates. There seem to be a positive correlation between career hits and career batting average regardless of HoF status, however the relationship is not as strong when it comes to home runs versus batting average.

With this we have answered the first question posed at the beginning of this post. To answer the second one, we need to build some machine learning model to predict whether an eligible player will ever be elected to the HoF.

Preparing Training and Test Data

Since a player must wait 5 years to become eligible for the HoF ballot, and can remain on the ballot for as many as 10 years then there are still eligible players who played their final season in the last 15 years. Hence those who played their last games in 2003 will be eligible for consideration in 2018 and so on.

# Filter `df` for players who retired more than 15 years ago

df_hitters = df[df['finalGame'] < 2002]

# Filter `df` for players who retired less than 15 years ago and for currently active players

df_eligible = df[df['finalGame'] >= 2002]

# Players who retired less than 15 years ago but more than 5 years ago and were inducted

early_inductees = df_eligible[df_eligible['HoF'] == 1]

# Remove these players from `df_eligible`

df_eligible = df_eligible[df_eligible['HoF'] != 1]

# Add these players to `df_hitters`

df_hitters = df_hitters.append(early_inductees)df_hitters is what we will use to train and test our model on since it contains statistics of past Hall of Famers while df_eligible is the “new” data consisting of eligible players that we would like to make predictions of.

print(len(df_hitters))

# Separate `df_hitters` into target (response) and features (predictors)

target = df_hitters['HoF']

features = df_hitters.drop(['playerID', 'nameFirst', 'nameLast', 'HoF'], axis=1)5487

Logistic Regression

The first model we’ll try is a Logistic Regression model and we’ll be using the Kfold cross-validation technique.

from sklearn.cross_validation import cross_val_predict, KFold

from sklearn.linear_model import LogisticRegression

# Create Logistic Regression model

lr = LogisticRegression(class_weight='balanced')

# Create an instance of the KFold class

kf = KFold(features.shape[0], random_state=1)

# Create predictions using cross validation

predictions_lr = cross_val_predict(lr, features, target, cv=kf)To determine accuracy, we need to compare our predictions to the target. The error metrics we’ll be using are counts and rates of True Positive (TP), False Positive (FP), and False Negative (FN), whose definitions are given below:

- True Positive: The player was predicted to be in the HoF and they are a HoF member.

- False Positive: The player was predicted to be in the HoF but they are not a HoF member.

- False Negative: The player was predicted to be not in the HoF but they are indeed a HoF member.

- True Negative: The player was predicted not to be in the HoF and they are not a HoF member.

From here, we can compute the rates as follows:

- True Positive rate: # True Positive / (# True Positive + # False Negative)

- False Negative rate: # False Negative / (# False Negative + # True Positive)

- False Positive rate: # False Positive / (# False Positive + # True Negative)

# Import NumPy as np

import numpy as np

# Convert predictions and target to NumPy arrays

np_predictions_lr = np.asarray(predictions_lr)

np_target = target.as_matrix()# Create a function to report TP, FP, and FN rates

def predAccuracy(predictions, target):

# Determine True Positive count

tp_filter = (predictions == 1) & (target == 1)

tp = len(predictions[tp_filter])

# Determine False Negative count

fn_filter = (predictions == 0) & (target == 1)

fn = len(predictions[fn_filter])

# Determine False Positive count

fp_filter = (predictions == 1) & (target == 0)

fp = len(predictions[fp_filter])

# Determine True Negative count

tn_filter = (predictions == 0) & (target == 0)

tn = len(predictions[tn_filter])

# Determine True Positive rate

tpr = tp / (tp + fn)

# Determine False Negative rate

fnr = fn / (fn_lr + tp)

# Determine False Positive rate

fpr = fp / (fp + tn)

# Print each count

print("True Positive Count: {0}".format(tp))

print("False Negative Count: {0}".format(fn))

print("False Positive Count: {0}".format(fp))

# Print each rate

print("True Positive Rate: {0:6.4f}".format(tpr))

print("False Negative Rate: {0:6.4f}".format(fnr))

print("False Positive Rate: {0:6.4f}".format(fpr))# Accuracy rates of logistic regression model

predAccuracy(np_predictions_lr, np_target)True Positive Count: 60

False Negative Count: 12

False Positive Count: 35

True Positive Rate: 0.8333

False Negative Rate: 0.1667

False Positive Rate: 0.0065

Random Forest

What we’re trying to answer is a classic example of a classification problem, and it would be a crime not to mention random forest algorithm at some point. In the following we’ll see how this algorithm stacks up against the logistic regression model.

# Import RandomForestClassifier from sklearn

from sklearn.ensemble import RandomForestClassifier

# Create penalty dictionary

penalty = {

0: 100,

1: 1

}

# Create Random Forest model

rf = RandomForestClassifier(random_state=1,n_estimators=12, max_depth=11, min_samples_leaf=1, class_weight=penalty)

# Create predictions using cross validation

predictions_rf = cross_val_predict(rf, features, target, cv=kf)

# Convert predictions to NumPy array

np_predictions_rf = np.asarray(predictions_rf)# Accuracy rates of random forest model

predAccuracy(np_predictions_rf, np_target)True Positive Count: 51

False Negative Count: 21

False Positive Count: 8

True Positive Rate: 0.7083

False Negative Rate: 0.3333

False Positive Rate: 0.0015

Although the random forest is less accurate, predicting only 51 of 72 Hall of Famers, its FN and FP counts are far fewer. Hence it will be the model of choice to make our predictions.

Making predictions

We’ll use the trained and tested random forest model to make predictions on the probability of getting voted into the HoF for each player in df_eligible and then print out 50 players who have the highest chance of doing so. This will also answer our second question.

# Create a new features DataFrame

new_features = df_eligible.drop(['playerID', 'nameFirst', 'nameLast', 'HoF'], axis=1)

# Fit the Random Forest model

rf.fit(features, target)

# Estimate probabilities of Hall of Fame induction

probabilities = rf.predict_proba(new_features)

# Convert predictions to a DataFrame

hof_predictions = pd.DataFrame(probabilities[:,1])

# Sort the DataFrame (descending)

hof_predictions = hof_predictions.sort_values(0, ascending=False)

hof_predictions.rename(columns = {0:'prob'}, inplace = True)

# Merge the prediction with new_data

new_data.index = range(len(new_data))

new_data.head()

hof_predictions = hof_predictions.join(new_data, how = 'left')

hof_predictions.index = range(len(hof_predictions))

hof_predictions.head(50)| prob | playerID | nameFirst | nameLast | bats_R | throws_R | finalGame | AB | R | H | ... | G_ss_percent | G_lf_percent | G_cf_percent | G_rf_percent | G_of_percent | G_dh_percent | AVE | OBP | Slug_Percent | OPS | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.000000 | ortizda01 | David | Ortiz | 0 | 0 | 2016 | 8640.0 | 1419.0 | 2472.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.842608 | 0.286111 | 0.379447 | 0.551505 | 0.930952 |

| 1 | 1.000000 | ramirma02 | Manny | Ramirez | 1 | 1 | 2011 | 8244.0 | 1544.0 | 2574.0 | ... | 0.000000 | 0.450478 | 0.000000 | 0.392702 | 0.841877 | 0.144222 | 0.312227 | 0.410477 | 0.585395 | 0.995872 |

| 2 | 1.000000 | cabremi01 | Miguel | Cabrera | 1 | 1 | 2016 | 7853.0 | 1321.0 | 2519.0 | ... | 0.000000 | 0.118321 | 0.000000 | 0.047710 | 0.165553 | 0.037214 | 0.320769 | 0.398511 | 0.562078 | 0.960589 |

| 3 | 1.000000 | pujolal01 | Albert | Pujols | 1 | 1 | 2016 | 9138.0 | 1670.0 | 2825.0 | ... | 0.000412 | 0.110882 | 0.000000 | 0.016488 | 0.127370 | 0.139736 | 0.309149 | 0.392248 | 0.572554 | 0.964802 |

| 4 | 1.000000 | jeterde01 | Derek | Jeter | 1 | 1 | 2014 | 11195.0 | 1923.0 | 3465.0 | ... | 0.973426 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.026574 | 0.309513 | 0.374306 | 0.439571 | 0.813877 |

| 5 | 1.000000 | jonesch06 | Chipper | Jones | 0 | 1 | 2012 | 8984.0 | 1619.0 | 2726.0 | ... | 0.019608 | 0.142457 | 0.000000 | 0.003601 | 0.145658 | 0.011204 | 0.303428 | 0.400980 | 0.529274 | 0.930254 |

| 6 | 1.000000 | vizquom01 | Omar | Vizquel | 0 | 1 | 2012 | 10586.0 | 1445.0 | 2877.0 | ... | 0.912736 | 0.000337 | 0.000000 | 0.000337 | 0.000674 | 0.002358 | 0.271774 | 0.329143 | 0.352069 | 0.681212 |

| 7 | 1.000000 | heltoto01 | Todd | Helton | 0 | 0 | 2013 | 7962.0 | 1401.0 | 2519.0 | ... | 0.000000 | 0.005785 | 0.000000 | 0.000890 | 0.006676 | 0.000890 | 0.316378 | 0.413862 | 0.539061 | 0.952923 |

| 8 | 1.000000 | abreubo01 | Bobby | Abreu | 0 | 1 | 2014 | 8480.0 | 1453.0 | 2470.0 | ... | 0.000000 | 0.058557 | 0.008660 | 0.820619 | 0.881649 | 0.066392 | 0.291274 | 0.394703 | 0.474764 | 0.869467 |

| 9 | 1.000000 | beltrad01 | Adrian | Beltre | 1 | 1 | 2016 | 10295.0 | 1428.0 | 2942.0 | ... | 0.002574 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.030882 | 0.285770 | 0.337833 | 0.479845 | 0.817678 |

| 10 | 1.000000 | gonzalu01 | Luis | Gonzalez | 0 | 1 | 2008 | 9157.0 | 1412.0 | 2591.0 | ... | 0.000000 | 0.933230 | 0.003474 | 0.008491 | 0.942107 | 0.010807 | 0.282953 | 0.366252 | 0.478869 | 0.845121 |

| 11 | 0.916705 | thomeji01 | Jim | Thome | 0 | 1 | 2012 | 8422.0 | 1583.0 | 2328.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.321667 | 0.276419 | 0.401823 | 0.554144 | 0.955967 |

| 12 | 0.916667 | kentje01 | Jeff | Kent | 1 | 1 | 2008 | 8498.0 | 1320.0 | 2461.0 | ... | 0.001305 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.003046 | 0.289598 | 0.355143 | 0.499647 | 0.854790 |

| 13 | 0.916667 | guerrvl01 | Vladimir | Guerrero | 1 | 1 | 2011 | 8155.0 | 1328.0 | 2590.0 | ... | 0.000000 | 0.000466 | 0.000932 | 0.747555 | 0.748952 | 0.236609 | 0.317597 | 0.378629 | 0.552544 | 0.931173 |

| 14 | 0.833417 | gonzaju03 | Juan | Gonzalez | 1 | 1 | 2005 | 6556.0 | 1061.0 | 1936.0 | ... | 0.000000 | 0.216696 | 0.149201 | 0.449378 | 0.776199 | 0.217880 | 0.295302 | 0.343117 | 0.560708 | 0.903824 |

| 15 | 0.833333 | beltrca01 | Carlos | Beltran | 0 | 1 | 2016 | 9301.0 | 1522.0 | 2617.0 | ... | 0.000000 | 0.000814 | 0.639805 | 0.256410 | 0.893773 | 0.082621 | 0.281368 | 0.353165 | 0.491560 | 0.844725 |

| 16 | 0.750093 | walkela01 | Larry | Walker | 0 | 1 | 2005 | 6907.0 | 1355.0 | 2160.0 | ... | 0.000000 | 0.016600 | 0.034708 | 0.864185 | 0.907445 | 0.013581 | 0.312726 | 0.399875 | 0.565224 | 0.965099 |

| 17 | 0.750093 | camermi01 | Mike | Cameron | 1 | 1 | 2011 | 6839.0 | 1064.0 | 1700.0 | ... | 0.000000 | 0.003069 | 0.914066 | 0.083887 | 0.982609 | 0.005627 | 0.248574 | 0.336631 | 0.443778 | 0.780409 |

| 18 | 0.750079 | tejadmi01 | Miguel | Tejada | 1 | 1 | 2013 | 8434.0 | 1230.0 | 2407.0 | ... | 0.896361 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.012437 | 0.285392 | 0.334891 | 0.455537 | 0.790428 |

| 19 | 0.750079 | ramirar01 | Aramis | Ramirez | 1 | 1 | 2015 | 8136.0 | 1098.0 | 2303.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.011851 | 0.283063 | 0.340864 | 0.492134 | 0.832997 |

| 20 | 0.750076 | ordonma01 | Magglio | Ordonez | 1 | 1 | 2011 | 6978.0 | 1076.0 | 2156.0 | ... | 0.000000 | 0.000000 | 0.014610 | 0.926948 | 0.933442 | 0.056277 | 0.308971 | 0.368380 | 0.502436 | 0.870816 |

| 21 | 0.750070 | renteed01 | Edgar | Renteria | 1 | 1 | 2011 | 8142.0 | 1200.0 | 2327.0 | ... | 0.982342 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000929 | 0.285802 | 0.339290 | 0.398059 | 0.737349 |

| 22 | 0.750070 | damonjo01 | Johnny | Damon | 0 | 0 | 2012 | 9736.0 | 1668.0 | 2769.0 | ... | 0.000000 | 0.274699 | 0.521285 | 0.059036 | 0.830120 | 0.149398 | 0.284408 | 0.350096 | 0.432827 | 0.782923 |

| 23 | 0.750070 | jonesan01 | Andruw | Jones | 1 | 1 | 2012 | 7599.0 | 1204.0 | 1933.0 | ... | 0.000000 | 0.051002 | 0.785064 | 0.102004 | 0.930328 | 0.048725 | 0.254376 | 0.337142 | 0.485590 | 0.822732 |

| 24 | 0.750070 | anderga01 | Garret | Anderson | 0 | 0 | 2010 | 8640.0 | 1084.0 | 2529.0 | ... | 0.000000 | 0.622531 | 0.181329 | 0.072711 | 0.858618 | 0.106373 | 0.292708 | 0.323199 | 0.461111 | 0.784310 |

| 25 | 0.750061 | delgaca01 | Carlos | Delgado | 0 | 1 | 2009 | 7283.0 | 1241.0 | 2038.0 | ... | 0.000000 | 0.028501 | 0.000000 | 0.000000 | 0.028501 | 0.090909 | 0.279830 | 0.383389 | 0.545929 | 0.929318 |

| 26 | 0.750056 | suzukic01 | Ichiro | Suzuki | 0 | 1 | 2016 | 9689.0 | 1396.0 | 3030.0 | ... | 0.000000 | 0.038800 | 0.124800 | 0.780400 | 0.927200 | 0.020800 | 0.312726 | 0.354481 | 0.404583 | 0.759064 |

| 27 | 0.750039 | francju01 | Julio | Franco | 1 | 1 | 2007 | 8677.0 | 1285.0 | 2586.0 | ... | 0.282153 | 0.001583 | 0.000000 | 0.000396 | 0.001583 | 0.148397 | 0.298029 | 0.363889 | 0.417195 | 0.781083 |

| 28 | 0.750038 | mcgrifr01 | Fred | McGriff | 0 | 0 | 2004 | 8757.0 | 1349.0 | 2490.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.071138 | 0.284344 | 0.376843 | 0.509078 | 0.885921 |

| 29 | 0.666786 | leeca01 | Carlos | Lee | 1 | 1 | 2012 | 7983.0 | 1125.0 | 2273.0 | ... | 0.000000 | 0.843259 | 0.000000 | 0.000000 | 0.843259 | 0.039066 | 0.284730 | 0.338607 | 0.482776 | 0.821383 |

| 30 | 0.666770 | willibe02 | Bernie | Williams | 0 | 1 | 2006 | 7869.0 | 1366.0 | 2336.0 | ... | 0.000000 | 0.004335 | 0.894027 | 0.029865 | 0.926782 | 0.062139 | 0.296861 | 0.380426 | 0.477316 | 0.857742 |

| 31 | 0.666769 | rolensc01 | Scott | Rolen | 1 | 1 | 2012 | 7398.0 | 1211.0 | 2077.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.280752 | 0.364287 | 0.490403 | 0.854690 |

| 32 | 0.666769 | cabreor01 | Orlando | Cabrera | 1 | 1 | 2011 | 7562.0 | 985.0 | 2055.0 | ... | 0.928463 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.002519 | 0.271754 | 0.315082 | 0.389712 | 0.704793 |

| 33 | 0.666761 | aloumo01 | Moises | Alou | 1 | 1 | 2008 | 7037.0 | 1109.0 | 2134.0 | ... | 0.000000 | 0.639547 | 0.051493 | 0.310505 | 0.959835 | 0.011843 | 0.303254 | 0.368887 | 0.515703 | 0.884589 |

| 34 | 0.666761 | durhara01 | Ray | Durham | 0 | 1 | 2008 | 7408.0 | 1249.0 | 2054.0 | ... | 0.000000 | 0.000000 | 0.000506 | 0.000000 | 0.000506 | 0.027342 | 0.277268 | 0.349757 | 0.435745 | 0.785502 |

| 35 | 0.666761 | loftoke01 | Kenny | Lofton | 0 | 0 | 2007 | 8120.0 | 1528.0 | 2428.0 | ... | 0.000000 | 0.023776 | 0.943414 | 0.004755 | 0.970518 | 0.005706 | 0.299015 | 0.368746 | 0.422783 | 0.791529 |

| 36 | 0.666761 | martied01 | Edgar | Martinez | 1 | 1 | 2004 | 7213.0 | 1219.0 | 2247.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.682725 | 0.311521 | 0.417320 | 0.515458 | 0.932778 |

| 37 | 0.666760 | edmonji01 | Jim | Edmonds | 0 | 0 | 2010 | 6858.0 | 1251.0 | 1949.0 | ... | 0.000000 | 0.030830 | 0.879165 | 0.023869 | 0.928394 | 0.010443 | 0.284194 | 0.375439 | 0.527122 | 0.902560 |

| 38 | 0.666760 | leede02 | Derrek | Lee | 1 | 1 | 2011 | 6962.0 | 1081.0 | 1959.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.004119 | 0.281385 | 0.364561 | 0.494685 | 0.859247 |

| 39 | 0.666746 | hunteto01 | Torii | Hunter | 1 | 1 | 2015 | 8857.0 | 1296.0 | 2452.0 | ... | 0.000000 | 0.007167 | 0.642074 | 0.305228 | 0.951096 | 0.039629 | 0.276843 | 0.331201 | 0.461443 | 0.792644 |

| 40 | 0.666738 | olerujo01 | John | Olerud | 0 | 0 | 2005 | 7592.0 | 1139.0 | 2239.0 | ... | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.059534 | 0.294916 | 0.397440 | 0.464963 | 0.862403 |

| 41 | 0.666738 | grissma02 | Marquis | Grissom | 1 | 1 | 2005 | 8275.0 | 1187.0 | 2251.0 | ... | 0.000000 | 0.039261 | 0.908545 | 0.024480 | 0.964434 | 0.000924 | 0.272024 | 0.316442 | 0.414502 | 0.730943 |

| 42 | 0.666737 | grudzma01 | Mark | Grudzielanek | 1 | 1 | 2010 | 7052.0 | 946.0 | 2040.0 | ... | 0.347392 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.001110 | 0.289280 | 0.330001 | 0.393222 | 0.723223 |

| 43 | 0.666737 | ibanera01 | Raul | Ibanez | 0 | 1 | 2014 | 7471.0 | 1055.0 | 2034.0 | ... | 0.000000 | 0.678852 | 0.000925 | 0.079130 | 0.750116 | 0.143915 | 0.272253 | 0.335347 | 0.465132 | 0.800479 |

| 44 | 0.666737 | konerpa01 | Paul | Konerko | 1 | 1 | 2014 | 8393.0 | 1162.0 | 2340.0 | ... | 0.000000 | 0.007663 | 0.000000 | 0.000000 | 0.007663 | 0.146871 | 0.278804 | 0.354024 | 0.486477 | 0.840501 |

| 45 | 0.666729 | gilesbr02 | Brian | Giles | 0 | 0 | 2009 | 6527.0 | 1121.0 | 1897.0 | ... | 0.000000 | 0.349756 | 0.164050 | 0.479155 | 0.956145 | 0.021657 | 0.290639 | 0.399617 | 0.502375 | 0.901992 |

| 46 | 0.666706 | finlest01 | Steve | Finley | 0 | 0 | 2007 | 9397.0 | 1443.0 | 2548.0 | ... | 0.000000 | 0.013937 | 0.895858 | 0.073171 | 0.962447 | 0.005420 | 0.271150 | 0.329350 | 0.442375 | 0.771725 |

| 47 | 0.666673 | polanpl01 | Placido | Polanco | 1 | 1 | 2013 | 7214.0 | 1009.0 | 2142.0 | ... | 0.063311 | 0.002595 | 0.000000 | 0.000000 | 0.002595 | 0.001557 | 0.296923 | 0.338828 | 0.397283 | 0.736111 |

| 48 | 0.666667 | kotsama01 | Mark | Kotsay | 0 | 0 | 2013 | 6464.0 | 790.0 | 1784.0 | ... | 0.000000 | 0.032393 | 0.528736 | 0.242424 | 0.792059 | 0.030825 | 0.275990 | 0.330708 | 0.404394 | 0.735101 |

| 49 | 0.666667 | matthga02 | Gary | Matthews | 0 | 1 | 2010 | 4103.0 | 612.0 | 1056.0 | ... | 0.000000 | 0.141296 | 0.583138 | 0.227166 | 0.903201 | 0.021077 | 0.257373 | 0.330951 | 0.405313 | 0.736264 |

50 rows × 58 columns

Limitations

While it’s nice to be able to tell who are likely to get elected in future ballots, this is a naïve model: we don’t predict the career trajectories of current players. We simply ask: if they retired now, and we relax the 10-year minimum requirement, would their statistical output qualify them for the Hall of Fame based on what we’ve seen voters do in the past?

This brings us to a related question: how much would steroid suspicions hurt the chance of getting in? Take Ivan Rodriguez for example. Despite allegations of injections of PED in 2003, he nevertheless made it in to the HoF in 2016. Along with cases for Tim Raines and Jeff Bagwell, who were also suspected of steroid use, this proves voters do forgive, but on what conditions and to what extent we never know.

References

- Lahman’s Baseball Database

- Scikit-Learn Tutorial: Baseball Analytics in Python Pt 2

- Hall of Famers - Rules for Election

- What Are the Major Eras of Major League Baseball History?

- List of people banned from Major League Baseball

- These 11 Players’ Hall of Fame Inductions Have Been Sabotaged by Steroid Allegations and Admissions

- Top 15 Baseball Players Who Have Used Performance Enhancing Drugs

- Hall of Fame Classification Using Random Forest